Comparing the accuracy and effectiveness of Wordvice AI Proofreader to two automated editing tools and human editors

Abstract

Purpose

Wordvice AI Proofreader is a recently developed web-based artificial intelligence-driven text processor that provides real-time automated proofreading and editing of user-input text. This study aimed to compare its accuracy and effectiveness to expert proofreading by human editors and to two other popular proofreading applications: the automated writing analysis tools of Google Docs and Microsoft Word. Because this tool was primarily designed for use by academic authors to proofread their manuscript drafts, the comparison of this tool’s efficacy to other tools was intended to establish the usefulness of this particular tool for these authors.

Methods

We performed a comparative analysis of proofreading completed by the Wordvice AI Proofreader, by experienced human academic editors, and by two other popular proofreading applications. The number of errors accurately reported and the overall usefulness of the vocabulary suggestions were measured using a Generalized Language Evaluation Understanding (GLEU) metric and open dataset comparisons.

Results

In the majority of texts analyzed, the Wordvice AI Proofreader achieved performance levels at or near those of the human editors, identifying similar errors and offering comparable suggestions. The Wordvice AI Proofreader also had higher performance and greater consistency than the other two proofreading applications evaluated.

Conclusion

We found that the overall functionality of the Wordvice artificial intelligence proofreading tool is comparable to that of a human proofreader and equal or superior to that of two other programs with built-in automated writing evaluation proofreaders used by tens of millions of users: Google Docs and Microsoft Word.

Introduction

Background/rationale: The use of English in all areas of academic publishing and the need for nearly all non-native English-speaking researchers to compose research studies in English have created difficulties for non-native English speakers worldwide attempting to publish their work in international journals. Faced with the time-consuming process of self-editing before submission to journals, many researchers are now using Automated Writing Analysis tools to edit their work and enhance their academic writing development [1,2]. These include grammatical error correction (GEC) programs that automatically identify and correct objective errors in text entered by the user. At the time of this study, most popular GEC tools are branded automated English proofreading programs that include Grammarly [3], Ginger Grammar Checker [4], and Hemingway Editor [5], all of which were developed using natural language processing (NLP) techniques; NLP is a type of artificial intelligence (AI) technology that allows computers to interpret and understand text in the same way a human does.

Although these AI writing and proofreading programs continue to grow in popularity, reviews regarding the effectiveness of these programs at large are inconsistent. Studies similar to the present one have analyzed the effectiveness of NLP text editors and their potential to approach the level of revision of expert human proofreading [6-8]. At least one 2016 article [9] evaluates popular GEC tools and comes to the terse conclusion that “grammar checkers do not work.” The jury appears to be out on the overall usefulness of modern GEC programs in correcting writing.

However, Napoles et al. [10] propose applying the Generalized Language Evaluation Understanding (GLEU) metric, a variant of the Bilingual Evaluation Understudy (BLEU) algorithm that “accounts for both the source and the reference” text, to establish a ground truth ranking that is rooted in judgements by human editors. Similarly, the present study applies a GLEU metric to compare the output of these automated proofreading tools more precisely with revision by human editors. While the practical application of many of these programs is evidenced by their success in the marketplace of writing and proofreading aids, gaps remain in how accurate and consistent certain AI proofreading programs are in correcting grammatical and spelling errors.

Objectives: This study aimed to analyze the effectiveness of the Wordvice AI Proofreader [11], a web-based AI-driven text processor that provides real-time automated proofreading and editing of user-input text. We also compared its effectiveness to expert proofreading by human editors and two other popular writing tools with proofreading and grammar checking applications, Google Docs [12] and Microsoft (MS) Word [13].

Methods

Ethics statement: This is not a human subject study. Therefore, neither approval by the institutional review board nor the obtainment of informed consent is required.

Study design: This was a comparative study that combined a qualitative open-dataset comparison with a quantitative comparison based on the GLEU metric.

Setting: The Wordvice AI Proofreader tool was measured in terms of its ability to identify and correct objective errors, and it was evaluated by comparing its performance to that of experienced human proofreaders and to two other commercial AI writing assistant tools with proofreading features: MS Word and Google Docs in June 2021. By combining the application of a quantitative GLEU metric with a qualitative open-dataset comparison, this study compared the effectiveness of the Wordvice AI Proofreader with that of other editing methods, both in the correction of “objective errors” (grammar, punctuation, and spelling) and in the identification and correction of more “subjective” stylistic issues (including weak academic language and terms).

Data sources

Open datasets

The performance of the Wordvice AI Proofreader was measured using the JHU FLuency-Extended GUG (JFLEG) open dataset [14], a dataset developed by researchers at Johns Hopkins University and consisting of a total of 1,501 sentences, 800 of which were used to comprise Dataset 1 in the experiment (https://github.com/keisks/jfleg). The JFLEG data consists of sentence pairs, showing the input text and the results of proofreading by professional editors. These datasets assess improvements in sentence fluency (style revisions), rather than recording all objective error corrections. According to Sakaguchi et al. [15], unnatural sentences can result when the annotator collects only the minimum revision data within a range of error types, and letting the annotator rephrase or rewrite a given sentence can result in more comprehensible and natural sentences. Thus, the JFLEG data was applied with the aim of assessing improvements in textual fluency rather than simple grammatical correction.

Because many research authors using automated writing assistant tools are English as a second language writers, the proofread data was based on sentences written by non-native English speakers. This was designed to create a more accurate sample pool for likely users of the AI Proofreader. “Proofread data” refers to data that has been corrected by professional native speakers with master’s and doctoral degrees in the academic domain. The data were constructed in pairs: sentence before receiving proofreading and sentence after receiving proofreading.

The sample data used in the experiment consisted of 1,245 sentences (i.e., 1,245 pairs of sentences were assessed both before and after proofreading): the 800 JFLEG sentences of Dataset 1 and 445 sentences derived from eight academic domains (arts and humanities, biosciences, business and economics, computer science and mathematics, engineering and technology, medicine, physical sciences, and social sciences). Table 1 summarizes the number of sentences applied from each academic domain (Dataset 2).

GLEU-derived datasets

The GLEU metric was used to create four datasets of comparison. The first dataset (Dataset 3), GLEU 1 (T1, P1), compares the correctness of the output sentence text of the Wordvice AI Proofreader (“predicted sentence,” P1) with that of human proofreaders (“ground truth sentence,” T1). The second dataset (Dataset 4), GLEU 2 (T1, P2), compares the correctness of the Wordvice AI Proofreader’s predicted sentence (P1). The third dataset (Dataset 5), GLEU 3 (T1, P2), compares the correctness of MS Word’s predicted sentence (P2). The fourth dataset (Dataset 6), GLEU 4 (T1, P3), compares the correctness of Google Docs’ predicted sentence (P3).

Measurement (evaluation metrics)

Error type comparison

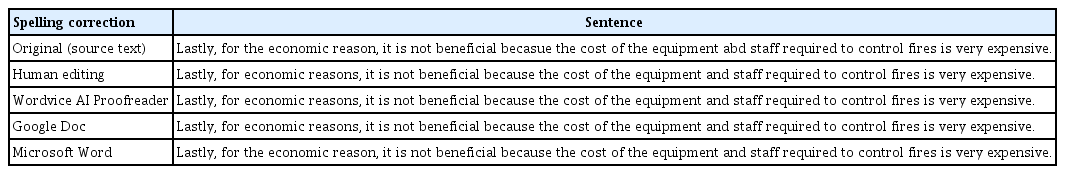

A qualitative comparison was performed on T1, P1, P2, and P3 for categories including stylistic improvement (fluency, vocabulary) and objective errors (determiner/article correction, spell correction). Table 2 illustrates these details for each writing correction method (human proofreading, Wordvice AI, MS Word, and Google Docs).

Comparison of the corrections and improvements of the sentences before correction, the sentences after correction of the comparative methods, and the sentences after the correction by Wordvice AI Proofreader

A GLEU metric [16] was used to evaluate the performance of all proofreading types (T1, P1, P2, and P3). GLEU is an indicator based on the BLEU metric [17]; it measures the overlap between ground truth sentences and predicted sentences in terms of n-grams, so that matching word sequences receive high scores. To calculate the GLEU score, we record all sub-sequences of 1, 2, 3, or 4 tokens in a given predicted and ground truth sentence. We then compute a recall (Equation 1), which is the ratio of the number of matching n-grams to the number of total n-grams in the ground truth sentence; we also compute a precision (Equation 2), which is the ratio of the number of matching n-grams to the number of total n-grams in the predicted sequence [18]. The NLTK Python library (https://www.nltk.org/_modules/nltk/translate/gleu_score.html) was used for the calculation of GLEU.
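Equations 1 and 2 are not reproduced in this text. Based only on the verbal definitions above, they can be written as follows; the symbols are notation assumed here, with $G_n(\cdot)$ denoting the multiset of all 1- to 4-token sub-sequences of a sentence, $T$ the ground truth sentence, and $P$ the predicted sentence.

```latex
\begin{align}
\text{recall}    &= \frac{\lvert G_n(P) \cap G_n(T) \rvert}{\lvert G_n(T) \rvert} \tag{1} \\
\text{precision} &= \frac{\lvert G_n(P) \cap G_n(T) \rvert}{\lvert G_n(P) \rvert} \tag{2}
\end{align}
```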

The GLEU score is then simply the minimum of recall and precision. This GLEU score’s range is always between 0 (no matches) and 1 (complete match). As with the BLEU metric, the higher the GLEU score, the higher the percentage of identified and corrected errors and issues captured by the proofreading tool. These are expressed as a percentage of the total revisions applied in the ground truth model (human-edited text), including objective errors and stylistic issues. The closer to the ground truth editing results, the higher the performance score and the better the editing quality.
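The calculation described above can be sketched in plain Python. This is a minimal illustration of the recall/precision/minimum procedure as stated in the text, not the authors' code and not the NLTK implementation the study cites; the function names `ngram_counts` and `gleu_score` and the example sentences are hypothetical.

```python
from collections import Counter


def ngram_counts(tokens, min_n=1, max_n=4):
    """Count every sub-sequence of min_n..max_n tokens in a token list."""
    counts = Counter()
    for n in range(min_n, max_n + 1):
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts


def gleu_score(ground_truth, predicted, min_n=1, max_n=4):
    """Return min(recall, precision) over 1- to 4-gram overlap."""
    truth_counts = ngram_counts(ground_truth, min_n, max_n)
    pred_counts = ngram_counts(predicted, min_n, max_n)
    total_truth = sum(truth_counts.values())
    total_pred = sum(pred_counts.values())
    if total_truth == 0 or total_pred == 0:
        return 0.0  # nothing to compare
    # Multiset intersection: each n-gram matches at most as often as it
    # occurs in both sentences.
    matches = sum((truth_counts & pred_counts).values())
    recall = matches / total_truth
    precision = matches / total_pred
    return min(recall, precision)


# Hypothetical example sentences (not taken from the study's datasets).
truth = "the cat sat on the mat".split()
pred = "the cat sat on mat".split()
print(round(gleu_score(truth, pred), 4))
```

Taking the minimum means a tool is penalized both for missing revisions present in the ground truth (low recall) and for introducing text that the ground truth does not contain (low precision).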

Statistical methods: Descriptive statistics were applied for comparison between the target program and other editing tools.

Results

Quantitative results based on GLEU

Comparison of all automated writing evaluation proofreaders

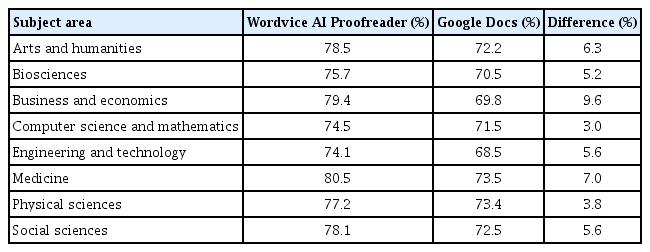

Table 3 shows the average GLEU score in terms of percentages of corrections made by the Wordvice AI Proofreader and other automated proofreading tools as compared to the ground truth sentences. As an average of total corrections made, the Wordvice AI Proofreader had the highest performance of the Automated Writing Analysis proofreading tools, performing 77% of the corrections applied by the human editor.

Percentage of appropriate corrections of all automated proofreaders compared to ground truth sentence (100% correct)

Based on the dataset of 1,245 sentences used in the experiment, the proofreading performance of Wordvice AI exceeded that of Google Docs’ proofreader by a maximum of 11.2%P and a minimum of 3.0%P (percentage points). Additionally, the GLEU score of the Wordvice AI-revised text was, on average, up to 13.0%P higher than that of the sentences before proofreading.
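Because the results mix differences expressed in %P with plain percentages, a short arithmetic sketch may help keep the two apart. The scores below are hypothetical round numbers chosen for illustration, not values from the study, and the function names are likewise assumptions.

```python
def percentage_point_gap(score_a, score_b):
    """Absolute gap between two percentage scores, in percentage points (%P)."""
    return score_a - score_b


def relative_gap_percent(score_a, score_b):
    """Gap expressed as a percentage of the second score (a relative change)."""
    return (score_a - score_b) / score_b * 100.0


# Hypothetical GLEU-based scores for two tools, in percent.
tool_a = 80.0
tool_b = 70.0

print(percentage_point_gap(tool_a, tool_b))            # gap in %P
print(round(relative_gap_percent(tool_a, tool_b), 2))  # relative gap in %
```

The same pair of scores yields a 10 %P gap but a relative gap of roughly 14%, so the unit needs to be stated whenever a difference is reported.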

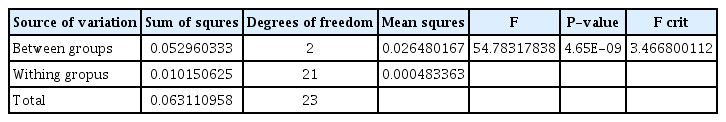

Analysis of variance was used to determine the statistical significance of the values. Comparisons made between Wordvice AI, Google Docs, and MS Word proofreading tools revealed a statistically significant difference in proofreading performance (analysis of variance, P < 0.05) (Table 4).
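As a rough illustration of the test named above, the sketch below computes the one-way analysis of variance F statistic for three groups of scores. The per-sentence scores are hypothetical placeholders, not the study's data, and `one_way_anova_f` is a name introduced here; obtaining the P value additionally requires the F distribution, for which a statistics package such as SciPy (`scipy.stats.f_oneway`) could be used.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Between-group variability: how far each group mean is from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group variability: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical GLEU scores per tool (illustrative only).
scores_by_tool = {
    "tool_a": [0.78, 0.80, 0.76, 0.79],
    "tool_b": [0.70, 0.68, 0.73, 0.71],
    "tool_c": [0.64, 0.66, 0.63, 0.67],
}

f_stat = one_way_anova_f(list(scores_by_tool.values()))
print(f"F = {f_stat:.2f}")
```

A large F value indicates that the differences between the tools' mean scores are large relative to the variation within each tool's scores.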

Comparison of Wordvice AI Proofreader and Google Docs proofreading tool

Google Docs proofreader’s results scored second in total corrections. Our comparative method confirmed that the deviation of Wordvice AI performance was smaller than that of the performance of Google Docs and MS Word proofreaders.

The proofreading performance of Wordvice AI (with a variation of 5.4%) was more consistent in terms of percentage of errors corrected compared to MS Word (with a variation of 5.6%), but was slightly less consistent than the Google Docs proofreader (with a variation of 5%).

Comparison of Wordvice AI Proofreader and MS Word proofreading tool

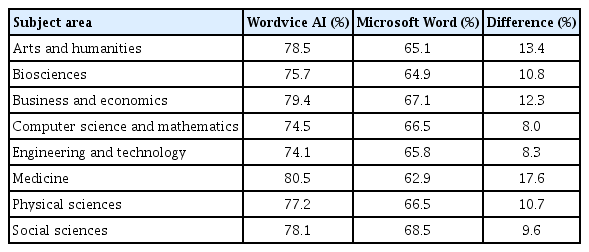

We compared the AI Proofreader’s performance in each academic subject area with that of Google Docs and MS Word, as listed in the Methods section (Tables 3, 5, 6). In each of the eight subject areas, the Wordvice AI Proofreader showed the highest proofreading performance, by total percentage of ground truth sentence corrections applied, at 79.4%. When compared using the GLEU method, MS Word applied the least revision of the three proofreading tools, and its output was closest to the original source text in terms of revised to unrevised text. Table 3 shows the comparison between performance of the Wordvice AI Proofreader and Google Docs.

Comparison of Wordvice AI Proofreader performance to Google Docs proofreader by academic subject area

Comparison of Wordvice AI Proofreader performance to Microsoft Word’s proofreader by academic subject area

The Wordvice AI Proofreader exhibited higher performance than MS Word in every subject area. As illustrated in Table 4, the Wordvice AI Proofreader outperformed the MS Word proofreader by 17.6%P in the subject area of medicine and by 8.1%P in computer science and mathematics. It also exhibited an 11.4% total average performance advantage over MS Word across the subject areas.

Qualitative results

Qualitative results were derived from an open dataset by applying a set of error category criteria (Table 2). These criteria are applied to the input sentences before proofreading, input sentences proofread by MS Word and Google Docs, and sentences proofread by Wordvice AI.

Criterion 1. Fluency improvement (stylistic improvement)

The Wordvice AI Proofreader improved sentence fluency by editing awkward expressions, similar to revision applied in documents edited by editing experts (“human editing”). In Table 7, “point” was used to indicate how different editing applications can interpret the intended or “correct” meaning of words that have multiple potential meanings. In the original sentence instance, “point” means pointing a finger or positioning something in a particular direction. However, “point out” means indicating the problem, and thus the original term “point” was changed to “point out” by human editing. Because our study considers the sentence revised by human editing as 100% correct, this result accurately conveys the intended meaning of the sentence—here, “point out” is more appropriate than “point.”

Google Docs applied the same correction, changing “points” to “points out,” but it did not correct the misspelling “scond,” the intended meaning of which human editing recognized as “second.” Wordvice AI, by contrast, corrected both of these errors, following the human editor’s revisions. MS Word did not detect or correct either error in this sentence.

Criterion 2. Vocabulary improvement (stylistic improvement)

Wordvice AI Proofreader applied appropriate terminology to convey sentence meaning in the same manner as the human editor. Human editing removed the unnecessary definite article “the” from the phrase “the most countries” to capture the intended meaning of “an unspecified majority”; it also changed the phrase “functioning of the public transport” to “public transport” to reduce wordiness (Table 8).

Similarly, Wordvice AI improved the clarity of the sentence by removing the unnecessary article “the” from the abovementioned sentence. In addition, Wordvice AI was able to improve the clarity of the sentence by inserting a comma and the word “completely,” neither of which was a revision made by human editing. Furthermore, neither Google Docs nor MS Word performed these or any additional revisions to the text.

Criterion 3. Determiner/article correction (objective errors)

In the grammar assessment, Wordvice AI exhibited the same level of performance as human editing. Table 9 shows that the objective errors identified and corrected by Wordvice AI were the same as those corrected by human editing. A comma is required before the phrase “in other words” to convey the correct meaning, but the comma is omitted in the original. Both the human edit and Wordvice AI edit detected the error and added a comma appropriately.

Additionally, the definite article “the” should be deleted from the original sentence because it is unnecessary in this usage, and both the human edit and Wordvice AI edit performed this revision correctly. Finally, because the human body is not composed of one bone, but multiple bones, the term “bone” should be revised to “bones.” Both Wordvice AI and the human editor recognized this error and corrected it appropriately. However, Google Docs and MS Word did not detect or correct these errors.

Criterion 4. Spelling correction (objective errors)

The ability to recognize and correct misspellings was exhibited not only by Wordvice AI, but also by all the other proofreading methods we compared (Table 10). In this original sentence, the misspelled word “becasue” should be revised to “because,” and the misspelled word “abd” should be revised to “and.” Each of the proofreading tools accurately recognized the corresponding spelling mistakes and corrected them.

Discussion

Key results: In terms of the accurately revised text, as evaluated by the GLEU metric, Wordvice AI exhibited the highest proofreading score compared to the other proofreading applications, identifying and correcting 77% of the human editor-corrected text. The Wordvice AI Proofreader scored an average of 12.8%P higher than both Google Docs and MS Word in terms of total errors corrected. The proofreading performance of Wordvice AI (variation of 5.4%) was more consistent in terms of percentage of errors corrected compared to MS Word (variation of 5.6%) but was slightly less consistent than Google Docs (variation of 5%). These results indicate that Wordvice AI Proofreader is more thorough than these other two proofreading tools in terms of the percentage of errors identified, though it does not edit stylistic or subjective issues as extensively as the human editor.

Additionally, Wordvice AI Proofreader exhibited consistent levels of proofreading among all academic subject areas evaluated in the GLEU comparison. Variability in editing performance among these subject areas was also relatively small, with only a 6.4%P difference between the lowest and highest average editing applied compared to the human proofreader. Both Google Docs and MS Word exhibited similar degrees of variability in performance throughout all subject areas. The highest percentage of appropriate corrections recorded for these automated writing evaluation proofreaders (Google Docs: medicine 73.5%) was still lower than Wordvice AI Proofreader’s lowest average (medicine 80.5%).

Interpretation: The Wordvice AI Proofreader identifies and corrects writing and language errors in any main academic domain. This tool could be especially useful for researchers writing manuscripts to check the accuracy of their writing in English before submitting their draft to a professional proofreader, who can provide additional stylistic editing. NLP applications like the Wordvice AI Proofreader may exhibit greater accuracy in correcting objective errors than more widely used applications like MS Word and Google Docs because the input text is derived primarily from academic writing samples. Similar AI proofreaders trained on academic texts (such as Trinka and Ginger) may also prove more useful for research authors than general proofreading tools such as Grammarly, Hemingway Editor, and Ginger, among others.

Suggestion of further studies: By training the software with more sample texts, the Wordvice AI Proofreader could potentially exhibit performance and accuracy levels even closer to those of human editors. However, due to the current output limits of NLP and AI, human editing by professional editors remains the most comprehensive and effective form of text revision, especially for authors of academic documents, which require the understanding of jargon and natural expressions in English.

Conclusion: In most of the texts analyzed, the Wordvice AI Proofreader performed at or near the level of the human editor, identifying similar errors and offering comparable suggestions in the majority of sample passages. The AI Proofreader also had higher performance and greater consistency than the other two proofreading applications evaluated. When used alongside professional editing and proofreading to ensure natural expressions and flow, Wordvice AI Proofreader has the potential to improve manuscript writing efficiency and help users to communicate more effectively with the global scientific community.

Notes

Conflict of Interest

The authors are employees of Wordvice. Except for that, no potential conflict of interest relevant to this article was reported.

Funding

The authors received no financial support for this study.

Data Availability

Dataset file is available from the Harvard Dataverse at: https://doi.org/10.7910/DVN/KZ1MYX

Dataset 1. Eight hundred sentence pairs out of 1,501 from JHU FLuency-Extended GUG (JFLEG) open dataset, which were used for assessing improvements in textual fluency (https://github.com/keisks/jfleg).

Dataset 2. Four hundred forty-five sentences from eight academic domains, derived from Wordvice’s academic document data: arts and humanities, biosciences, business and economics, computer science and mathematics, engineering and technology, medicine, physical sciences, and social sciences.

Dataset 3. One thousand two hundred forty-five sentences composed of 800 JFLEG data and 445 academic sentence data edited by human editing experts.

Dataset 4. One thousand two hundred forty-five sentences composed of 800 JFLEG data and 445 academic sentence data edited by Wordvice AI.

Dataset 5. One thousand two hundred forty-five sentences composed of 800 JFLEG data and 445 academic sentence data edited by MS Word.

Dataset 6. One thousand two hundred forty-five sentences composed of 800 JFLEG data and 445 academic sentence data edited by Google Docs.