An algorithm for the selection of reporting guidelines

Abstract

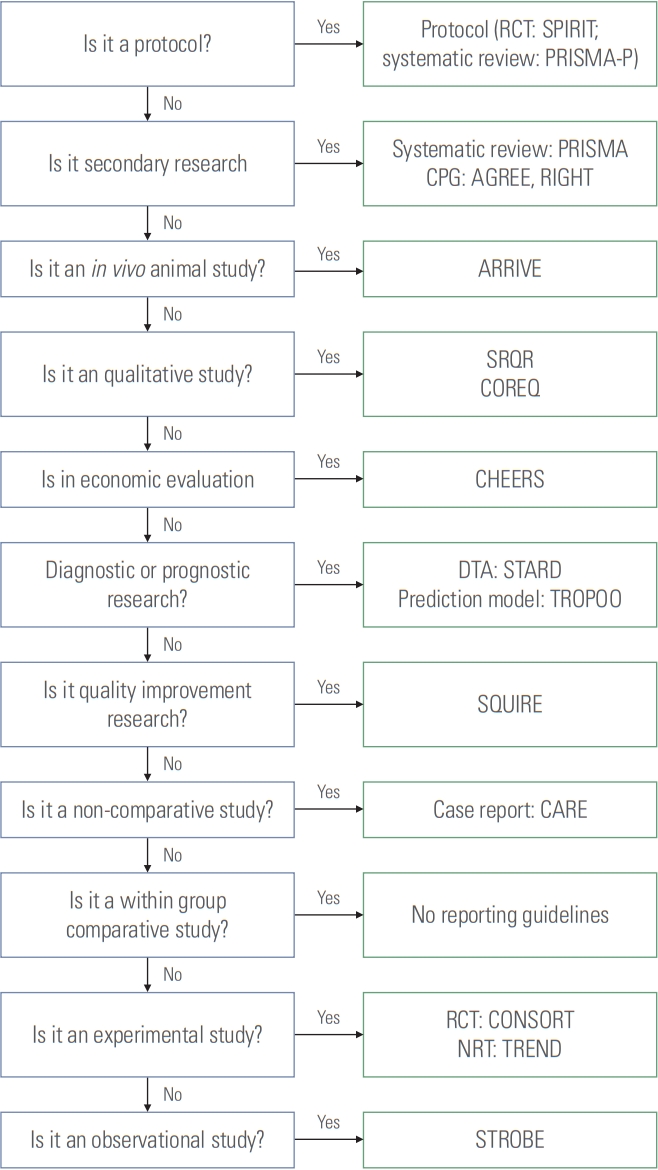

A reporting guideline can be defined as “a checklist, flow diagram, or structured text to guide authors in reporting a specific type of research, developed using explicit methodology.” A reporting guideline outlines the bare minimum of information that must be presented in a research report in order to provide a transparent and understandable explanation of what was done and what was discovered. Many reporting guidelines have been developed, and it has become important to select the most appropriate reporting guideline for a manuscript. Herein, I propose an algorithm for the selection of reporting guidelines. This algorithm was developed based on the research design classification system and the content presented for major reporting guidelines through the EQUATOR (Enhancing the Quality and Transparency of Health Research) network. This algorithm asks 10 questions: “is it a protocol,” “is it secondary research,” “is it an in vivo animal study,” “is it qualitative research,” “is it economic evaluation research,” “is it a diagnostic accuracy study or prognostic research,” “is it quality improvement research,” “is it a non-comparative study,” “is it a comparative study between groups,” and “is it an experimental study?” According to the responses, 16 appropriate reporting guidelines are suggested. Using this algorithm will make it possible to select reporting guidelines rationally and transparently.

Introduction

IMRAD (introduction, methods, results, and discussion) is the most commonly used format for scientific articles. The introduction usually reports the rationale and purpose of the study. The methods section describes the time, place, process, materials, and participants of the study. The results and discussion sections report the answer to the research question and the meaning and impact of the findings. In other words, scientific papers should appropriately report the purpose of the research, as well as information about its validity, usefulness, and meaning [1]. In practice, however, improper reporting (underreporting, misreporting, and selective reporting) occurs in many papers and lowers the validity of the research [2].

The purpose of reporting guidelines is to reduce these problems, and a reporting guideline can be defined as “a checklist, flow diagram, or structured text to guide authors in reporting a specific type of research, developed using explicit methodology” [3]. Reporting guidelines were actively developed after the publication of CONSORT (Consolidated Standards of Reporting Trials), a reporting guideline for randomized controlled trials (RCTs), and there are now more than 500 reporting guidelines that can be used by research authors in the medical field. Almost all reporting guidelines are searchable and available through the EQUATOR (Enhancing the Quality and Transparency of Health Research; http://www.equator-network.org) network.

Researchers are the main users of the reporting guidelines, which can be utilized when writing manuscripts and protocols. Numerous reporting guidelines have been developed, and it has become important to select the most appropriate reporting guideline for a manuscript to be reviewed. However, a system that recommends appropriate reporting guidelines through tools such as algorithms is not yet available. The purpose of this study is to suggest an algorithm for selecting reporting guidelines.

Background for an Algorithm for the Selection of a Reporting Guideline

The currently developed reporting guidelines do not apply to all scientific studies. The EQUATOR network specifies the scope of reporting guidelines as health research. However, a classification of the developed reporting guidelines indicates that the actual scope includes human subjects and in vivo animal experiments. These reporting guidelines can also be applied to studies without human subjects, as long as they are conducted using the same methodology as in human subject research. For example, a study of the data-sharing policies of academic journals could be reported using the STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) guideline for reporting observational research, even though it is not a human subject study, since it can be viewed as a cross-sectional study. In the future, the scope of reporting guidelines may be expanded to other scientific fields.

Since the reporting guidelines developed to date deal with human subject research and in vivo animal experiments, an algorithm for selecting appropriate reporting guidelines can be suggested through a few questions.

Questions in the Algorithm for the Selection of Reporting Guidelines

Preliminary consideration

As mentioned above, since the reporting guidelines are limited to human studies and in vivo animal studies, it is necessary to review whether the research design of the manuscript under consideration corresponds to a human study or an in vivo animal study. If the answer to this question is “no,” then no applicable reporting guideline has been developed to date. If the answer to the preliminary consideration is “yes,” then an appropriate reporting guideline can be selected through the questions below.

• Is it a protocol?

• Is it secondary research?

• Is it an in vivo animal study?

• Is it qualitative research?

• Is it economic evaluation research?

• Is it a diagnostic accuracy study or prognostic research?

• Is it quality improvement research?

• Is it a non-comparative study?

• Is it a comparative study between groups?

• Is it an experimental study?

Is it a protocol?

In health research, a protocol is a written research plan. In the medical field, protocols are mainly prepared when conducting clinical trials or systematic reviews. The main reporting guideline for clinical trial protocols is SPIRIT (Standard Protocol Items: Recommendations for Interventional Trials) [4], and the main reporting guideline for systematic review protocols is PRISMA-P (Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols) [5].

Is it secondary research?

Research can be divided into primary and secondary research. Primary research collects data directly, whereas secondary research relies on existing data when conducting systematic investigations [6]. The main types of secondary research conducted in the medical field are systematic literature reviews and clinical practice guidelines. The reporting guidelines suitable for systematic literature reviews are based on the PRISMA guideline [7]. Various extensions exist for PRISMA, including PRISMA-DTA (PRISMA for Diagnostic Test Accuracy) [8], PRISMA-ScR (PRISMA Extension for Scoping Reviews) [9], and PRISMA-S (PRISMA Extension for Reporting Literature Searches in Systematic Reviews) [10]. The reporting guidelines suitable for clinical practice guidelines are AGREE (Appraisal of Guidelines for Research and Evaluation) [11] and RIGHT (Reporting Tool for Practice Guidelines in Health Care) [12].
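The secondary research branch can be sketched as a simple lookup. This is only an illustration of the mapping described above: the category labels and the function name are hypothetical, and PRISMA-S is left out because it covers the literature search component rather than a distinct study type.

```python
# Hypothetical category labels; the guideline names come from the text above.
SECONDARY_RESEARCH_GUIDELINES = {
    "systematic_review": ("PRISMA",),
    "diagnostic_test_accuracy_review": ("PRISMA-DTA",),
    "scoping_review": ("PRISMA-ScR",),
    "clinical_practice_guideline": ("AGREE", "RIGHT"),
}


def guidelines_for_secondary_research(kind):
    """Return the candidate reporting guidelines for a kind of secondary research."""
    try:
        return SECONDARY_RESEARCH_GUIDELINES[kind]
    except KeyError:
        # Unknown labels are rejected rather than silently mapped to a guideline.
        raise ValueError(f"unknown secondary research type: {kind!r}") from None


print(guidelines_for_secondary_research("clinical_practice_guideline"))
```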

Is it an in vivo animal study?

Animal studies include in vitro studies and in vivo studies. The term in vitro, which means “in glass” in Latin, describes diagnostic procedures, scientific tests, and experiments that researchers carry out outside a living organism. An in vitro experiment takes place in a controlled setting, such as a test tube or Petri dish. The Latin term in vivo means “among the living.” It describes procedures, tests, and examinations that scientists carry out in or on a complete living organism, such as a human or a laboratory animal [13]. In general, there are no appropriate reporting guidelines for in vitro studies, while the ARRIVE (Animal Research: Reporting of In Vivo Experiments) reporting guideline exists for in vivo studies [14].

Is it qualitative research?

Quantitative research deals with numbers and statistics when gathering and analyzing data, whereas qualitative research deals with words and meanings. The results of qualitative research are written to aid in understanding ideas, experiences, or concepts. A researcher can gain comprehensive knowledge on poorly understood subjects through this type of research. Common qualitative techniques include open-ended questions in interviews, written descriptions of observations, and literature reviews that examine concepts and theories [15]. Two major reporting guidelines for qualitative research are SRQR (Standards for Reporting Qualitative Research) [16] and COREQ (Consolidated Criteria for Reporting Qualitative Research) [17].

Is it economic evaluation research?

Economic evaluation research can be defined as “the process of systematic identification, measurement and valuation of the inputs and outcomes of two alternative activities, and the subsequent comparative analysis of these” [18]. For economic evaluation studies, the most appropriate reporting guideline is CHEERS 2022 (Consolidated Health Economic Evaluation Reporting Standards 2022) [19].

Is it a diagnostic accuracy study or prognostic research?

A diagnostic test accuracy study provides evidence on how well a test correctly identifies or rules out disease and informs subsequent decisions about treatment for clinicians, their patients, and healthcare providers who interpret diagnostic accuracy studies for patient care [20]. The reporting guideline used to report research on diagnostic accuracy is STARD (Standards for Reporting Diagnostic Accuracy Studies) [21].

In general, prognosis-related papers should be reported according to the observational study reporting guideline (STROBE), but in the case of a prognostic prediction model, it should be reported according to TRIPOD (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis) [22].
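The choice among STARD, TRIPOD, and STROBE described in this section can be sketched as follows. The three study labels and the function name are hypothetical and were introduced only for this illustration.

```python
def guideline_for_diagnostic_or_prognostic(study_kind):
    """Map a diagnostic or prognostic study to the guideline named in the text."""
    mapping = {
        # Diagnostic test accuracy studies
        "diagnostic_accuracy": "STARD",
        # Prognostic prediction models
        "prognostic_prediction_model": "TRIPOD",
        # Other prognosis-related papers follow the observational guideline
        "other_prognostic": "STROBE",
    }
    if study_kind not in mapping:
        raise ValueError(f"unknown study kind: {study_kind!r}")
    return mapping[study_kind]


print(guideline_for_diagnostic_or_prognostic("prognostic_prediction_model"))
```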

Is it quality improvement research?

Quality improvement is the framework used to systematically improve care. To reduce variation, achieve predictable results, and improve outcomes for patients, healthcare systems, and organizations, quality improvement aims to standardize processes and structure [23]. For quality improvement studies, the most appropriate reporting guideline is SQUIRE (Standards for Quality Improvement Reporting Excellence) [24].

If the answer to the quality improvement question is “no,” the study design is an interventional study. An interventional research design is classified according to the questions of DAMI (Design Algorithm for Medical Literature on Intervention) [25].

Is it a non-comparative study?

According to the DAMI tool, the first question to ask is whether a study is analytical or descriptive. DAMI asks this question: “Were the primary outcomes compared according to intervention/exposure or the existence of a disease?” [25]. If the answer is “no,” the study is descriptive, and the corresponding research design is a case report or a case series. Case reports and case series are generally distinguished by the number of reported cases, and studies reporting three or more cases are classified as case series [26].

For case reports, the representative reporting guideline is CARE (Case Report Guidelines) [27]. There are no leading reporting guidelines for case series. However, since case series are mainly published in surgical journals, they can use reporting guidelines developed for various surgical fields, including general surgery (the PROCESS [Preferred Reporting of Case Series in Surgery] guideline) [28], as well as case series reporting guidelines in the field of plastic surgery [29].
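The distinction between case reports and case series can be sketched with the three-case threshold given above. The function name and the `surgical` flag are hypothetical; returning `None` stands for "no leading reporting guideline," and PROCESS is offered only for surgical case series, as the text suggests.

```python
from typing import Optional

# Threshold from the text: three or more cases make a case series.
CASE_SERIES_MIN_CASES = 3


def guideline_for_descriptive_study(n_cases: int, surgical: bool = False) -> Optional[str]:
    """Suggest a guideline for a descriptive (non-comparative) study."""
    if n_cases < 1:
        raise ValueError("n_cases must be at least 1")
    if n_cases < CASE_SERIES_MIN_CASES:
        return "CARE"  # case report
    # Case series: no leading guideline; PROCESS applies in surgical fields.
    return "PROCESS" if surgical else None


print(guideline_for_descriptive_study(2))
print(guideline_for_descriptive_study(10, surgical=True))
```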

Is it a comparative study between groups?

Comparative studies can be divided into within-group and between-group comparative studies. A within-group comparison refers to repeated measurements of the primary outcome among the same individuals or group at different time points [25]. DAMI’s question on this is, “Were the primary outcomes of different groups compared?” A “yes” response indicates a between-group comparative study, whereas a “no” response indicates a within-group design, such as a before-after study or interrupted time series research. Currently, there are no clear reporting guidelines for within-group comparative studies.

Is it an experimental study?

If the investigators determined study participants’ exposure to interventions, then the study is classified as an experimental study. In such studies, investigators directly control the intervention time, process, and administration. If the study participants are exposed to specific interventions without the direct control of investigators, then the study is classified as observational [25]. DAMI’s question on this is, “Did the investigators allocate study participants to each group?” If the answer to this question is “yes,” the study is classified as experimental, and if the assignment is randomized (“Was the group randomized?”), it is classified as an RCT. For RCTs, the most commonly used reporting guideline is the CONSORT 2010 Statement [30]. There are 33 extensions of CONSORT. Widely known and used examples include the reporting guidelines for clinical trials related to COVID-19 (the CONSERVE 2021 Statement) [31], RCTs related to artificial intelligence (CONSORT-AI Extension) [32], and RCTs conducted using cohorts and routinely collected data (CONSORT-ROUTINE) [33].

There is no reporting guideline for nonrandomized clinical trials in general, although the TREND (Transparent Reporting of Evaluations with Nonrandomized Designs) statement exists for use in behavioral and public health intervention clinical trials [34]. For observational studies, the STROBE statement should be used as a reporting guideline [35]. When using STROBE, an appropriate sub-checklist should be used in accordance with the representative observational study design (cohort studies, case-control studies, and cross-sectional studies). The DAMI question corresponding to cross-sectional studies is, “Were the data for exposure to the intervention and for primary outcomes collected concurrently?” The question that distinguishes cohort studies from case-control studies is, “Was each group organized on the basis of exposure to the intervention?”
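The DAMI questions quoted in this section can be chained into a small classifier for between-group comparative studies. This is a minimal sketch, not the DAMI tool itself: the function and parameter names are hypothetical, and it assumes the standard reading that groups organized by exposure indicate a cohort study, while groups organized by outcome indicate a case-control study.

```python
def classify_comparative_design(
    allocated_by_investigators: bool,
    randomized: bool = False,
    collected_concurrently: bool = False,
    grouped_by_exposure: bool = False,
) -> str:
    """Classify a between-group comparative study from yes/no answers."""
    # "Did the investigators allocate study participants to each group?"
    if allocated_by_investigators:
        # "Was the group randomized?"
        return "RCT" if randomized else "NRT"
    # "Were the data for exposure and primary outcomes collected concurrently?"
    if collected_concurrently:
        return "cross-sectional"
    # "Was each group organized on the basis of exposure to the intervention?"
    return "cohort" if grouped_by_exposure else "case-control"


# TREND is listed for NRTs although the text limits it to behavioral and
# public health intervention trials.
GUIDELINE_BY_DESIGN = {
    "RCT": "CONSORT",
    "NRT": "TREND",
    "cross-sectional": "STROBE",
    "cohort": "STROBE",
    "case-control": "STROBE",
}

design = classify_comparative_design(False, grouped_by_exposure=True)
print(design, GUIDELINE_BY_DESIGN[design])
```

For the three observational designs, the returned label also indicates which STROBE sub-checklist to use.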

Algorithm for Selecting Reporting Guidelines

Based on the answers to the above questions, an algorithm was constructed, as shown in Fig. 1. This algorithm should only be used for studies of human subjects or in vivo animal studies.
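As a rough illustration, the sequence of questions can also be written as an ordered series of checks. The sketch below is a reading of the text of this article rather than a transcription of Fig. 1; the `Answers` class, its field names, and the function name are hypothetical, and each branch returns candidate guidelines from which the user still has to choose.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Answers:
    """Yes/no answers to the preliminary consideration and the questions."""
    human_or_in_vivo_animal: bool = True  # preliminary consideration
    protocol: bool = False
    secondary: bool = False
    in_vivo_animal: bool = False
    qualitative: bool = False
    economic_evaluation: bool = False
    diagnostic_or_prognostic: bool = False
    quality_improvement: bool = False
    non_comparative: bool = False
    between_group: bool = True
    experimental: bool = False
    randomized: bool = False


def suggest_guidelines(a: Answers) -> List[str]:
    """Return candidate guidelines; an empty list means none is available."""
    if not a.human_or_in_vivo_animal:
        return []
    # The first question answered "yes" decides the branch.
    ordered = [
        (a.protocol, ["SPIRIT", "PRISMA-P"]),
        (a.secondary, ["PRISMA", "AGREE", "RIGHT"]),
        (a.in_vivo_animal, ["ARRIVE"]),
        (a.qualitative, ["SRQR", "COREQ"]),
        (a.economic_evaluation, ["CHEERS"]),
        (a.diagnostic_or_prognostic, ["STARD", "TRIPOD"]),
        (a.quality_improvement, ["SQUIRE"]),
        (a.non_comparative, ["CARE"]),
    ]
    for answer, guidelines in ordered:
        if answer:
            return guidelines
    if not a.between_group:
        return []  # within-group designs: no clear guideline
    if a.experimental:
        return ["CONSORT"] if a.randomized else ["TREND"]
    return ["STROBE"]


print(suggest_guidelines(Answers(qualitative=True)))
print(suggest_guidelines(Answers(experimental=True, randomized=True)))
```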

Fig. 1. Algorithm for the selection of reporting guidelines. RCT, randomized controlled trial; SPIRIT, Standard Protocol Items: Recommendations for Interventional Trials; PRISMA-P, Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols; PRISMA, Preferred Reporting Items for Systematic Review and Meta-Analysis; CPG, clinical practice guideline; AGREE, Appraisal of Guidelines for Research and Evaluation; RIGHT, Reporting Tool for Practice Guidelines in Health Care; ARRIVE, Animal Research: Reporting of In Vivo Experiments; SRQR, Standards for Reporting Qualitative Research; COREQ, Consolidated Criteria for Reporting Qualitative Research; CHEERS, Consolidated Health Economic Evaluation Reporting Standards; DTA, diagnostic test accuracy; STARD, Standards for Reporting Diagnostic Accuracy Studies; TRIPOD, Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis; SQUIRE, Standards for Quality Improvement Reporting Excellence; CARE, Case Report Guidelines; CONSORT, Consolidated Standards of Reporting Trials; NRT, nonrandomized trial; TREND, Transparent Reporting of Evaluations with Nonrandomized Designs; STROBE, Strengthening the Reporting of Observational Studies in Epidemiology.

Conclusion

Peer reviewers, authors, and journals frequently use reporting guidelines. Reporting guidelines raise the standard of research that is published in biomedical journals. To make the best possible use of reporting guidelines, it is necessary to select the appropriate reporting guideline for a given study. The algorithm for selecting reporting guidelines presented in this paper will be helpful for this purpose. If the research designs and scope of research to which reporting guidelines are applied are expanded, the algorithm will also need to be updated.

Users must take care to ensure that the numerous new reporting guidelines are developed with the same level of scrutiny and rigor as more established guidelines and that the interventions that result are meaningful.

Notes

Conflict of Interest

No potential conflict of interest relevant to this article was reported.

Funding

The author received no financial support for this article.